What is Split Testing? The Marketer’s #1 Ultimate Guide

- Last Updated February 4, 2022

Online marketing is full of unknowns.

Every offer is different, every brand is unique, and every target market has its quirks.

What works for “their” business might not work for yours, and the opposite is also true.

That’s where split testing comes in.

Split testing allows you to methodically determine the best way to advertise, market, and sell online for your specific business.

In this guide, we’ll explain what split testing is, how it works, some problems you should be aware of, the top software for split testing, and 9 steps to creating a meaningful split test.

Let’s get started.

What is Split Testing?

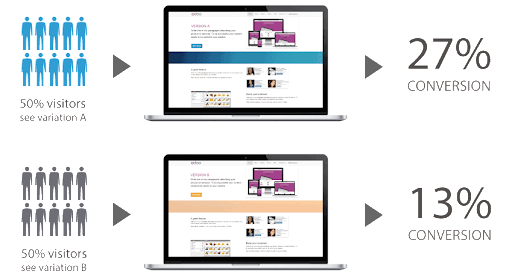

Split testing (also referred to as A/B testing, and sometimes loosely as multivariate testing) is a method by which online marketers create controlled experiments to determine the effectiveness of various elements of a marketing asset: sales copy, images, graphics, CTA, and so forth.

It’s called split testing because the marketer “splits” their web traffic equally between two or more variations of a single marketing asset (website, landing page, advertisement, email, or something else)…

… and then tests to determine which variation drives the most conversions.

The marketer can then utilize only the winning variation or continue testing to optimize further.
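To make the “splitting” concrete, here is a minimal Python sketch of one common way to divide traffic: hashing a visitor ID so that each visitor always lands in the same variation. The function name, the variation labels, and the experiment name are all hypothetical, and real split testing tools may assign traffic differently.

```python
import hashlib

VARIATIONS = ["control", "variant"]  # hypothetical labels for illustration


def assign_variation(visitor_id: str, experiment: str, variations=VARIATIONS) -> str:
    """Deterministically map a visitor to one variation with roughly equal odds."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variations)  # 0 or 1 for two variations
    return variations[bucket]


# Simulate 10,000 made-up visitors and count how many land in each variation.
counts = {name: 0 for name in VARIATIONS}
for i in range(10_000):
    counts[assign_variation(f"visitor-{i}", "headline-test")] += 1

print(counts)  # the two counts should be close to 5,000 each
```

Hashing (rather than flipping a coin on every page load) means a returning visitor keeps seeing the same version, which keeps the comparison clean.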

Here’s some split testing jargon you’ll need to understand before we dive deeper:

Conversion: A conversion refers to a specific goal being accomplished (e.g., opt-in or purchase). Conversion rate, then, refers to the percentage of visitors who ‘converted.’

Conversion Optimization: This is a marketing term that refers to the process of improving marketing assets to convert as effectively as possible. Split testing is part of the conversion optimization process.

Control / Variant: In split testing, the ‘control’ is the original version of the marketing asset, and the ‘variant’ is the new version that you hypothesize will convert better.

Multivariate Testing: Multivariate (or multivariable) testing refers to a more complex version of split testing where multiple variables are tested at once to determine which combination of variables performs the best.

A/B Test: A/B testing is another name for split testing, referring to the “A” and “B” page variations in split tests.
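Two of these terms are easy to pin down with a few lines of Python. The sketch below computes a conversion rate and counts how many page versions a multivariate test would need; the visitor numbers, headlines, and CTAs are made up for illustration.

```python
from itertools import product


def conversion_rate(conversions: int, visitors: int) -> float:
    """Return the percentage of visitors who converted."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return 100.0 * conversions / visitors


# Hypothetical example: 40 opt-ins from 200 visitors.
print(f"{conversion_rate(40, 200):.1f}%")  # 20.0%

# Multivariate testing: every combination of the tested elements is a page version.
headlines = ["Headline A", "Headline B"]
ctas = ["Buy now", "Start free trial", "Learn more"]
combinations = list(product(headlines, ctas))
print(len(combinations))  # 6 versions to split traffic across
```

The combination count is why multivariate tests need much more traffic than a simple A/B test: every added element multiplies the number of versions.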

Now you know what split testing is — and you understand the related jargon.

So why split test?

The Benefits of Split Testing for Online Marketers

The benefit of split testing is simple.

It allows online marketers to optimize their sales funnels to get more conversions, generate more leads, and make more sales.

Let’s look at an example of split testing in action.

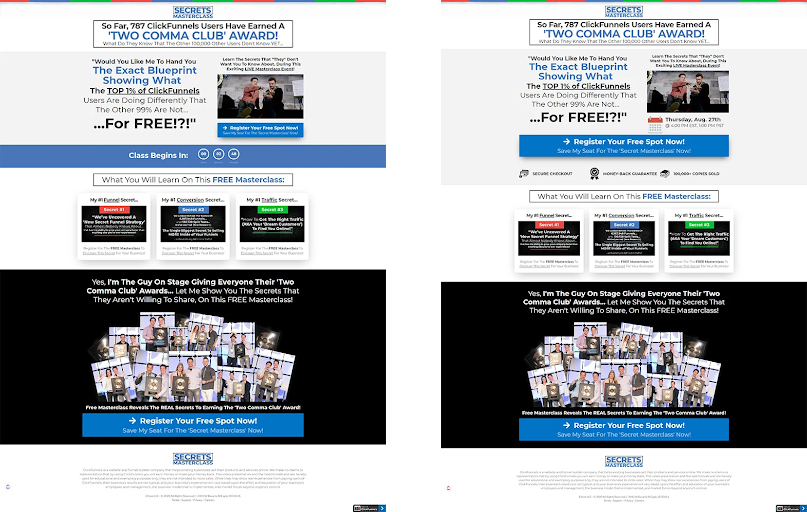

ClickFunnels once tested its “Top 1% Secrets Masterclass” webinar page to determine whether replacing the countdown timer with credibility badges would make a difference.

The control version (with the countdown timer) is on the left, and the variation (with the credibility badges) is on the right.

Not a big change, right?

Maybe even too small a change to make an impact.

Nope.

Here are the results.

In this case, the control version with the countdown timer won by a long shot — 30% conversion rate vs. an 11% conversion rate.

So ClickFunnels learned that they should keep the countdown timer on their landing page.

(To be clear, this lesson does not apply to every landing page… only to this specific experiment. More on that distinction later)
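If you want to describe a gap like that one, it helps to separate the absolute difference (in percentage points) from the relative lift. Here is a small sketch using the two conversion rates quoted above; the function name is hypothetical.

```python
def compare_rates(rate_a: float, rate_b: float) -> tuple[float, float]:
    """Return (absolute gap in percentage points, relative lift of A over B in %)."""
    absolute_points = (rate_a - rate_b) * 100
    relative_lift = (rate_a - rate_b) / rate_b * 100
    return absolute_points, relative_lift


# Conversion rates from the example: control 30%, variation 11%.
points, lift = compare_rates(0.30, 0.11)
print(f"{points:.0f} percentage points, {lift:.1f}% relative lift")
```

The same result can sound very different depending on which number gets reported, so it's worth checking which one a case study is quoting.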

That’s the value of split testing.

You can learn about what works and what doesn’t work for a specific sales funnel or marketing campaign.

5 Marketing Assets You Can Split Test (With Examples)

Now let’s talk about some of the marketing assets you might consider split testing.

1. Advertisements:

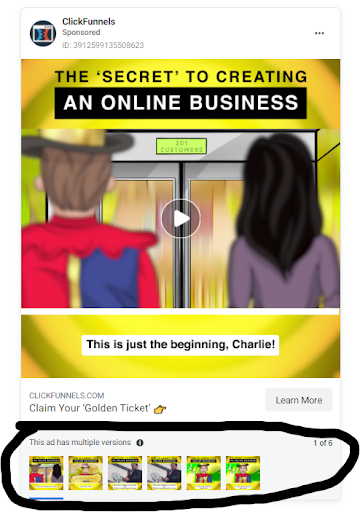

Virtually every advertising platform — Facebook, Google, Pinterest, LinkedIn, Twitter, etc. — gives marketers the ability to split test their advertisements.

You can test the…

- Copy

- CTA

- Image

- Video

- Targeting

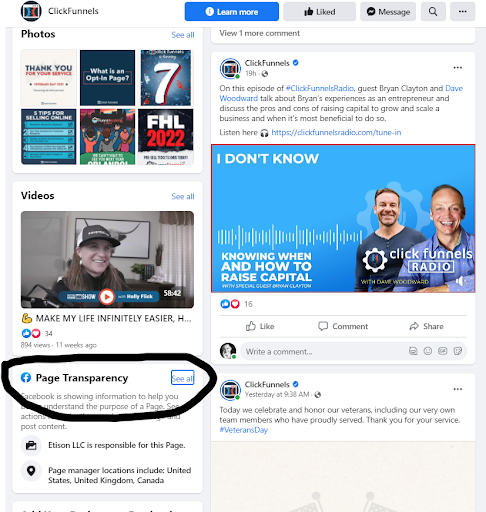

In fact, you can view the tests that any page is running on Facebook by going to the “Page Transparency” section.

For example, in the ClickFunnels ad library, you can see that they’re running 6 variations of this one advertisement…

If you’re looking for a good starting place, look at what your competitors are testing with their Facebook ads — that should give you some ideas.

2. Landing Pages:

Landing pages are ripe with split testing potential.

You can test…

- Copy

- Layout

- Images

- Videos

- Countdown Timers

- Testimonials

- CTA

- Color

And just about anything else on the landing page.

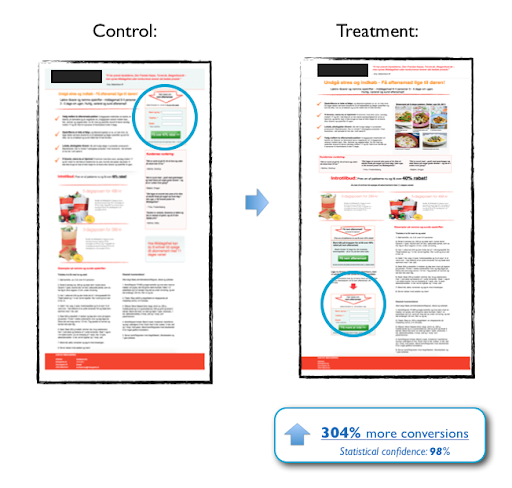

Here’s an example of a landing page split test run by Michael Aagaard of Content Verve. In their case, moving the CTA below the fold increased conversions by 304%.

3. Checkout Process:

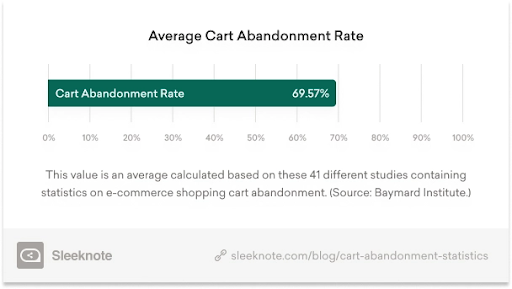

According to Baymard Institute’s research, the average cart abandonment rate (meaning people abandon the items in their online shopping cart without finishing their purchase) hovers just under 70%.

And so, the checkout process is another asset that’s ripe with split testing potential.

You can test…

- Copy

- Countdown Timers

- Order Bumps

- CTA

- Flow

- Credibility Badges

- Testimonials

- Loading Bars

Here’s an example where PayU increased its conversion rate by 5.8% by asking only for the user’s mobile phone number…

4. Upsells:

You can also split test the effectiveness of your upsells and your one-time-offer pages.

Consider…

- The Offer

- The Headline

- The CTA

- The Price Framing

- Testimonials

- Loading Bar

- Credibility Badges

- Sales Copy

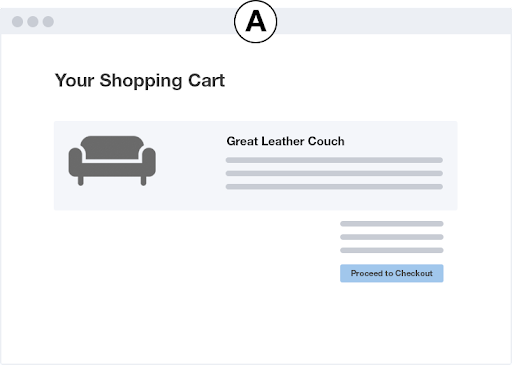

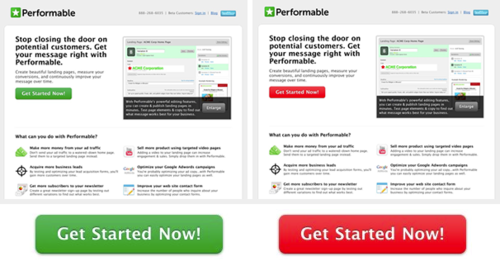

In one example, Growth Rock added an upsell to their checkout process. Here’s the control.

And here’s the variation.

According to their report, the average order value (AOV) increased by $55, with 92% statistical significance. Not bad, eh?

5. Emails:

Email marketing is another area where you can split test and optimize. Consider…

- Subject Line

- Preheader

- Sender Name

- Sender Profile Pic

- Email Copy

- CTA

Morning Brew, an email newsletter with over one million subscribers, famously used split testing to ensure their subject lines always got as many opens as possible.

Here’s how AWeber explains it…

- The Morning Brew team tests 4 subject lines every morning. These subject lines are based on catchy, interesting phrases from the news of the day.

- They send each subject line to 3% of their audience at 5 a.m. (12% in total)

- The winning subject line gets sent to the remaining 88% of their subscribers one hour later.
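The three steps above can be sketched in Python. This is only an illustration of the described process, not Morning Brew’s actual system: the function names, the subscriber list, and the open counts are all made up.

```python
import random


def split_audience(subscribers, n_variants=4, test_percent=3, seed=0):
    """Return (test_groups, remainder): n_variants random groups of test_percent% each."""
    rng = random.Random(seed)
    shuffled = list(subscribers)
    rng.shuffle(shuffled)
    group_size = len(shuffled) * test_percent // 100
    groups = [shuffled[i * group_size:(i + 1) * group_size] for i in range(n_variants)]
    remainder = shuffled[n_variants * group_size:]
    return groups, remainder


def pick_winner(open_counts, group_size):
    """Return the subject line with the highest open rate."""
    return max(open_counts, key=lambda subject: open_counts[subject] / group_size)


subscribers = [f"user{i}@example.com" for i in range(10_000)]  # made-up list
groups, remainder = split_audience(subscribers)

# Made-up open counts collected during the test window.
opens = {"Subject A": 120, "Subject B": 141, "Subject C": 98, "Subject D": 133}
winner = pick_winner(opens, len(groups[0]))
print(winner, "goes to the remaining", len(remainder), "subscribers")
```

With 10,000 subscribers, each test group holds 300 people (3%), and the remaining 8,800 (88%) receive the winning subject line.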

The Problems With Split Testing That No One Talks About

By now, you might have the impression that split testing is super simple and extremely useful for increasing conversions.

That’s partly true.

But there’s a dark side to split testing as well — three problems, in particular.

1. Statistical Significance:

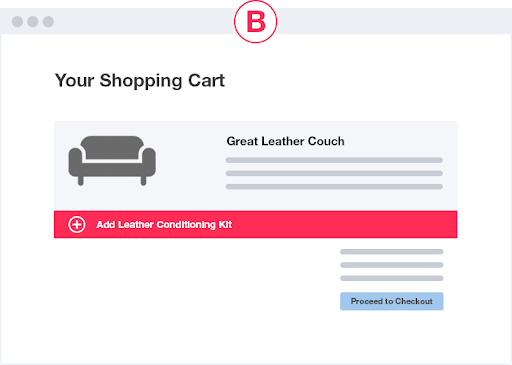

Imagine that you’re running a split test, and you drive 200 random visits to each variation of a landing page.

Version A gets 50 conversions, and version B gets 40 conversions.

That’s relatively compelling, right?

You might even be tempted to get rid of Version B altogether and opt for Version A instead. But according to statistics — which, unfortunately, we marketers aren’t immune to — you can only be 54.52% confident that that difference is real (which isn’t much better than a coin flip).

In other words, it’s almost as likely that your results are random as that they’re due to the page variations.
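If you’d like to check numbers like these yourself, here is a minimal sketch of a pooled two-proportion z-test, one common way to compare two conversion rates. It is an assumption that this is a reasonable test for your data; online calculators use different methods and different definitions of “confidence,” so their figures will not necessarily match this one or each other.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate if there were no real difference
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_error
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided, normal approximation
    return z, p_value


# The example above: 50 of 200 visitors converted on A, 40 of 200 on B.
z, p = two_proportion_z_test(50, 200, 40, 200)
print(f"z = {z:.2f}, two-sided p-value = {p:.2f}")
print("significant at 95%?", p < 0.05)
```

Under this particular test, the example does not clear the usual 95% threshold (a p-value below 0.05), which supports the same takeaway: 200 visits per variation is not enough to call a winner here.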

Statistical significance is a big problem with many online split testing claims.

Marketers get excited and don’t consider the real statistical significance of the test they’ve run — then their results get shared even though the data is faulty.

Fortunately, there are a lot of great free online tools to help you determine the significance of your split testing results.

Here’s one from SurveyMonkey.

2. Conversion Attribution:

Marketers aren’t scientists.

And where peer-reviewed scientific studies are ruthless about eliminating confounding factors, marketers sometimes change lots of different things on the variation all at once, see an improvement, and then claim that one specific change made the difference.

But if they changed multiple things at once, it’s impossible to know what element is responsible for increased conversion.

Fortunately, this isn’t that big of a deal for marketers. If the conversion rate improved, do you really care how it happened?

Most don’t.

The important thing is that you saw an improvement.

But you do need to know that other marketers’ split testing claims might not be as clear-cut as they seem.

3. Specific Made General:

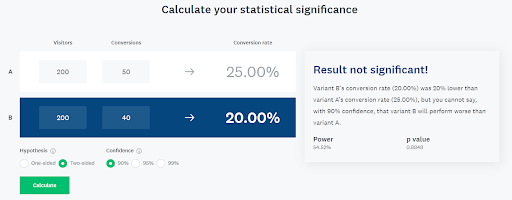

Here’s a famous test that has been tossed around online by marketers ad nauseam — the red button outperformed the green button by 21%.

Does that matter?

No.

No, it doesn’t. While it might say something about that specific offer (assuming it’s not a fluke), that specific landing page, and that specific traffic… it says nothing about your business or your marketing assets.

And that’s a problem in the split testing world.

Many marketers flock to Google looking for split tests that prove one element works better than another element. But it’s not as simple as that.

Some changes will perform better on mobile, but worse on desktop. The offer makes a difference, too. So do the brand, the traffic, the advertisement, and many other factors.

That is to say, what works for one sales funnel very well might not work for another.
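One way to see this in your own data is to break results down by segment instead of looking only at the overall winner. The sketch below uses made-up numbers in which the variant wins on mobile but loses on desktop; the row layout and function name are hypothetical.

```python
from collections import defaultdict

# (variation, device, visitors, conversions), made-up numbers for illustration
rows = [
    ("control", "mobile", 1000, 50),
    ("variant", "mobile", 1000, 70),
    ("control", "desktop", 1000, 90),
    ("variant", "desktop", 1000, 75),
]


def rates_by_segment(rows):
    """Return {device: {variation: conversion rate}}."""
    table = defaultdict(dict)
    for variation, device, visitors, conversions in rows:
        table[device][variation] = conversions / visitors
    return dict(table)


for device, rates in rates_by_segment(rows).items():
    better = max(rates, key=rates.get)
    print(f"{device}: {rates} -> {better} converts better")
```

Keep in mind that each segment has fewer visitors than the full test, so segment-level differences need their own significance check.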

Top 3 Split Testing Software for Optimizing Online Assets

Obviously, split testing isn’t as easy as creating two different versions of your landing pages and hoping for the best.

When running split tests on your own site, you need software that can randomize traffic equally between variations, monitor the conversion rate for each variation, and deliver results.

Here are three of the best to consider.

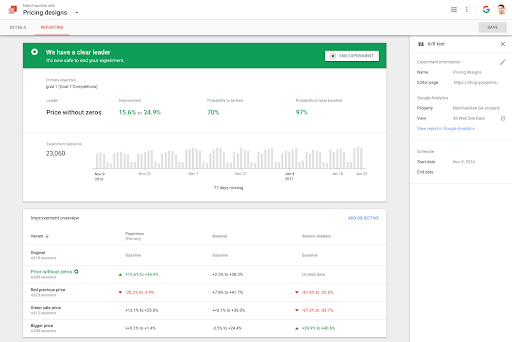

Google Optimize is a free tool that allows you to run split tests on your website. Features include A/B testing, multivariate testing, split URL testing, a visual editor, advanced reporting, and integrations with many other tools.

VWO is an A/B testing tool used by thousands of brands across the globe, including Ubisoft, AMD, Teamleader, and many others. It’s an extensive tool with lots of different features. If you want to get deep into split testing and have the bandwidth to do so, then this is the tool for you.

Crazy Egg is software made by Neil Patel that allows users to create heatmaps, snapshots, and recordings of website pages and run split tests. It’s a super user-friendly tool that is great for beginners and relatively inexpensive.

9 Steps To Create a Split Test That’s Actually Useful

Whew!

You now know what split testing is, why it’s beneficial, some of the pitfalls involved, assets that are worth testing, and software that’s worth using.

Now let’s dive into our 9-step process for creating meaningful split tests.

1. Take Stock of Bandwidth:

Creating a good quality split test sounds simple.

But once you get into it, things can get complicated — creating new graphics, writing new copy, building new elements, and so forth.

It requires time, effort, and good communication.

So it’s important to be realistic about how much bandwidth you and your team have for split testing. The more bandwidth you have, the more split tests you can run and the more you can learn.

However, if your bandwidth for split testing is low, don’t worry so much about determining the exact cause of conversion improvements or achieving 95%+ statistical significance. Instead, do the best you can with what you have.

There’s always an opportunity to optimize your sales funnel. This step is about setting realistic expectations for what you and your team are capable of.

2. Understand Why You Want to Split Test:

Why do you want to run a split test?

Split testing for the sake of split testing isn’t always a good idea — especially if you’re working with limited bandwidth.

So make your split tests count.

Obviously, you want to run split tests to improve conversion rate, open rate, click-through rate, or some other metric.

But ask yourself this question: is the sales funnel already converting at an acceptable and profitable rate?

If it is, then put your split testing budget elsewhere.

And rather than randomly guessing which elements of a sales funnel you should split test, run heat maps and visitor recordings to figure out how people are engaging — what they’re clicking, where they’re looking, and when they’re dropping off.

That’ll give you a good idea of which elements actually need to be tested and optimized.

3. Create a Hypothesis:

Once you’ve got a good reason for testing a specific part of your sales funnel, then it’s time to create a hypothesis.

A hypothesis is your proposed solution to a problem: an educated guess as to what might perform better than the current version.

You might hypothesize that adding a countdown timer will increase conversion rate via urgency and FOMO. Or you might hypothesize that using different testimonials will provide more compelling social proof.

Make sure you know what your hypothesis is before you run your test.

And if you’re struggling to come up with solutions worth testing, look at what your competitors are doing.

- What are they doing differently than you?

- What stands out?

- Assuming they have a better conversion rate, why might that be?

Chat with your team, examine the evidence from past tests and CRO audits, and make an educated guess. Then move on to the next step!

4. Determine Necessary Sample Size (and Budget):

As much as possible, you want to avoid running tests that generate insignificant results.

I once spoke with Jessie Valle, the Director of Marketing at ClickFunnels, about how interesting a particular A/B test they’d run was. She replied,

“It is very interesting, but what’s more interesting is that way more tests are null or fail completely. Not many winners, unfortunately.”

To some degree, that’s just the name of the game.

The lesson here is to increase your chances of collecting meaningful results. Do this by determining your necessary sample size and budget before you get started.

Use this calculator and work the math backward.

Enter numbers based on how you think the test will perform — and see what the statistical significance looks like. If you’re over 90%, then you’re in pretty good shape.

Also, to determine your budget, estimate how much it’ll cost to drive the necessary amount of traffic.

This isn’t a sure-fire solution, of course… but it’ll give you a better chance of success.
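If you’d rather run the numbers in code, here is a rough sketch using a standard sample size formula for comparing two conversion rates. The baseline rate, expected rate, significance level, power, and cost per click are all assumptions you’d replace with your own figures, and a calculator may use a slightly different formula.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline, expected, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation to detect baseline vs. expected rate."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # chance of catching a real effect
    p_bar = (baseline + expected) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline * (1 - baseline) + expected * (1 - expected))
    ) ** 2
    return math.ceil(numerator / (expected - baseline) ** 2)


# Hypothetical guess: the control converts at 10% and you hope the variant hits 12%.
n = sample_size_per_variation(0.10, 0.12)
cost_per_click = 0.75  # hypothetical ad cost in dollars
budget = 2 * n * cost_per_click  # two variations

print(f"{n} visitors per variation, roughly ${budget:,.0f} in traffic")
```

Notice how quickly the required traffic grows as the expected improvement shrinks. That’s a big reason so many tests end up inconclusive.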

5. Create Multiple Versions:

You’ve made your educated guess and determined the necessary sample size and budget.

Now it’s time to create your different versions.

The purist way to do this is to change only one element on the page, such as the headline, the CTA, or the opt-in form placement.

By testing only one element at a time, you can connect changes to your conversion rate to a specific element.

The only problem with this is that if you don’t have a big budget for driving a lot of traffic, it can take a really long time to make multiple optimizations.

You test one thing for three months and get a 5% improvement in conversion. Then you test something else for three months. And so on.

It’s tedious.

That’s why, if you have low bandwidth or budget for split testing, you might just consider making multiple changes to your variant during a single test.

This will require you to care less about which specific element impacts conversion rate and more about the impact itself. It’s a method by which you can consistently work to improve the conversion rate of your marketing assets without being so scientific about it.

There are pros and cons to both approaches.

6. Eliminate Distracting Variables:

Whether you’ve chosen the purist approach (only changing a single element) or the generalist approach (changing many elements), there are still some things that need to remain consistent for your split testing results to deliver meaningful data.

Specifically…

- Traffic Source

- Advertisement

- Load Speed

… and other factors that aren’t relevant to your hypothesis or what you’re trying to test.

The problem with having two different advertisements, for example, driving traffic to two different landing pages, is that you can’t tell whether the ad or landing page impacts the conversion rate.

One ad–landing page pair may perform better than the other… but it’s still comparing apples to oranges.

Basically, you don’t learn anything, and you can’t use the results to inform any advertising decisions.

Unless you use multivariate testing… but that’s another blog post.

7. Test Your Test:

Before you run your test, make sure everything is working correctly.

Visit the specified URL a few times to ensure that the software you’re using randomly drives you to either the control or the variant. Also, convert on both pages a couple of times to make sure the software is tracking and reporting correctly.
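A handful of manual visits won’t tell you whether the split is actually even. Once your tool has logged a larger batch of visits, a quick check like the sketch below can flag a lopsided split. It uses a chi-square test against a 50/50 split; the function name, the visit counts, and the 0.01 cutoff are assumptions for illustration.

```python
import math


def split_looks_even(visits_control, visits_variant, alpha=0.01):
    """Chi-square check (1 degree of freedom) of observed visits against a 50/50 split."""
    total = visits_control + visits_variant
    expected = total / 2
    chi2 = (
        (visits_control - expected) ** 2 + (visits_variant - expected) ** 2
    ) / expected
    p_value = math.erfc(math.sqrt(chi2 / 2))  # tail probability for 1 degree of freedom
    return p_value >= alpha, p_value


# Made-up counts: a small imbalance versus a suspicious one.
print(split_looks_even(5050, 4950))  # small gap, consistent with a fair split
print(split_looks_even(5300, 4700))  # large gap, worth investigating the setup
```

If the check fails, look for problems like redirects, caching, or tracking scripts that only fire on one version before trusting any conversion numbers.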

8. Drive Traffic:

Assuming that everything is working correctly, now it’s time to inject your test with a healthy dose of traffic. Use your estimated budget from Step 4.

And make sure that all the traffic is coming from the same source, with the same targeting and advertisements.

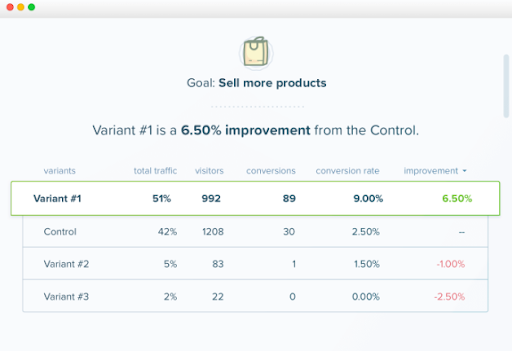

9. Analyze Results:

How’d your test perform?

Was your hypothesis right?

Are your results statistically significant?

Those are the types of questions to ask yourself when you’re examining the results. If things went well and there’s a clear winner, congratulations!

You can now make the winner your new control and let it be, or keep testing and iterating.

If things didn’t go so well and there’s no clear winner, or your results aren’t statistically significant, don’t get discouraged. That happens (more than you might believe).

Just determine what went wrong and try again — sometimes testing needs testing.

Conclusion: Split Testing

The value of split testing lies in its ability to decentralize optimization.

You don’t have to just hope that a marketing strategy that works for your competitors will work for your own business — you can test it.

You don’t have to rely on the whims and wishes of marketers and salespeople — you can test it.

And now you have a starting place.

Happy testing!
